The 2026 Guide to Answer Engine Optimization: How to Get Cited by AI

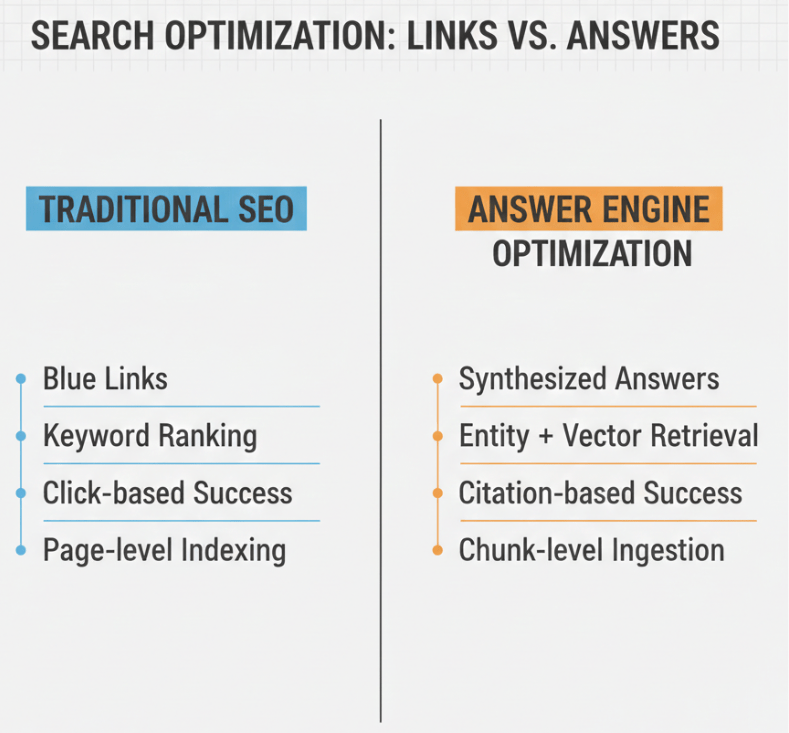

Search behavior has fundamentally shifted. For decades, the goal of optimization was simple: rank on the first page, earn the click, and convert the visitor once they reach your site. But as we move toward 2026, the traditional search engine results page (SERP) is rapidly being replaced by direct answers synthesized by Large Language Models (LLMs).

This transition from Search Engine Optimization (SEO) to Answer Engine Optimization (AEO) changes the developer’s mandate. You are no longer just writing for human readers or basic crawler indexing; you are engineering content to be ingested, understood, and cited by AI agents.

In this article, you’ll learn:

- The core differences between traditional indexing and AI retrieval (RAG).

- How to implement robust structured data to ensure entity recognition.

- Strategies for optimizing content for vector databases and context windows.

- Methods to track brand mentions in an era of “zero-click” searches.

From Blue Links to Generative Answers

To understand AEO, we must first look at the underlying technology of platforms like Perplexity, ChatGPT Search, and Google’s AI Overviews. These systems generally utilize Retrieval-Augmented Generation (RAG).

Unlike a traditional search engine, which looks up keywords in an inverted index, an Answer Engine works in two distinct phases:

- Retrieval: The system queries a vector database to find semantically relevant chunks of information.

- Generation: The LLM processes these chunks within its context window to synthesize a coherent answer.

If your content is unstructured, ambiguous, or rendered only by client-side JavaScript that crawlers cannot parse, the retrieval system will likely skip it. Consequently, the generation layer never sees your brand, and you won’t be cited.
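To make the two phases concrete, here is a minimal sketch of the retrieval step. It is a toy under stated assumptions: a bag-of-words term count stands in for a learned embedding model, an in-memory list stands in for the vector database, and the product name “Acme CLI” is hypothetical. The point it illustrates carries over to real systems: only the top-k chunks by similarity reach the LLM’s context window, so a chunk that never ranks is never cited.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase term counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Retrieval phase: rank every chunk by similarity to the query, keep the top k."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Generation-phase input: only the retrieved chunks enter the context window."""
    sources = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"


# Hypothetical page chunks for a hypothetical product.
chunks = [
    "Acme CLI deploys Python services to Kubernetes with one command.",
    "Our team loves coffee and offsite retreats.",
    "Acme CLI supports Python 3.10 and later.",
]
question = "How do I deploy a Python service with Acme CLI?"
context = retrieve(question, chunks, k=2)
print(build_prompt(question, context))
```

The off-topic chunk shares no terms with the question, so it never makes it into the prompt; nothing downstream can cite it.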

Structuring Data for Machine Comprehension

The foundation of AEO is explicit semantic clarity. While an LLM can infer meaning from unstructured text, relying on inference is risky. You need to provide deterministic signals about who you are and what you do.

Implementing JSON-LD Schema at Scale

For developers, JSON-LD is the most effective way to communicate with answer engines. By 2026, basic schema markup will be table stakes. To gain a competitive edge, you must go deeper into the schema.org type hierarchy and use its more specific types and properties.

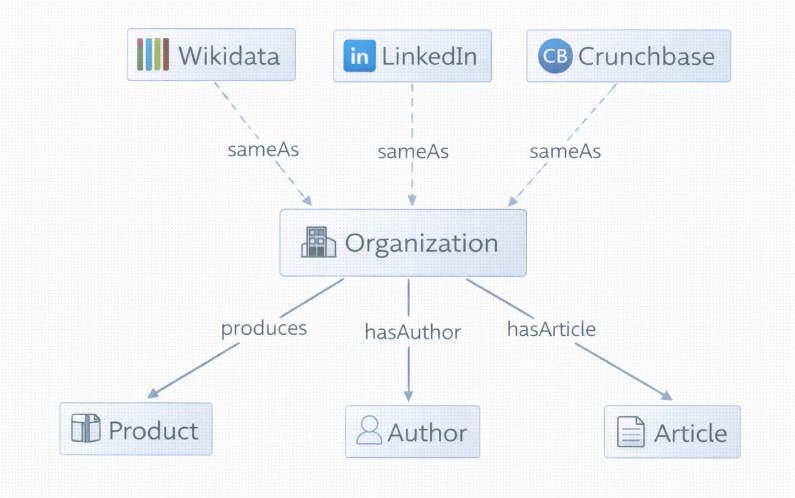

- Entity Linking: Don’t just tag your organization. Use the sameAs property to link your organization to trusted knowledge bases like Wikidata, Crunchbase, or LinkedIn. This helps the AI resolve your brand’s identity and reduces the risk of hallucinated or conflated details.

- Speakable Schema: As voice and conversational search grow, marking sections of your content with the speakable property can increase the likelihood of inclusion in voice responses, although support for it is still limited.

- Tech-Specific Types: If you are marketing a SaaS product or a developer tool, use specific types such as SoftwareApplication or TechArticle.
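A sketch of the markup described above, generated in Python so it can be templated across many pages. Every name, URL, and identifier here (Example Corp, Example CLI, the Wikidata ID, the CSS selectors) is a placeholder assumption; the schema.org types and properties (Organization, sameAs, SoftwareApplication, TechArticle, speakable, SpeakableSpecification) are real. Linking the nodes through a shared @id keeps them in one graph instead of three unrelated entities.

```python
import json

# Hypothetical canonical identifier for the organization node.
ORG_ID = "https://example.com/#organization"

graph = {
    "@context": "https://schema.org",
    "@graph": [
        {
            "@type": "Organization",
            "@id": ORG_ID,
            "name": "Example Corp",
            "url": "https://example.com/",
            # sameAs ties the entity to external knowledge bases (placeholder URLs).
            "sameAs": [
                "https://www.wikidata.org/wiki/Q000000",
                "https://www.crunchbase.com/organization/example-corp",
                "https://www.linkedin.com/company/example-corp",
            ],
        },
        {
            "@type": "SoftwareApplication",
            "name": "Example CLI",
            "applicationCategory": "DeveloperApplication",
            "operatingSystem": "Linux, macOS, Windows",
            "publisher": {"@id": ORG_ID},  # reference, not a duplicate Organization
        },
        {
            "@type": "TechArticle",
            "headline": "Deploying Python services with Example CLI",
            "author": {"@id": ORG_ID},
            # speakable points voice assistants at sections suited to reading aloud.
            "speakable": {
                "@type": "SpeakableSpecification",
                "cssSelector": [".summary", ".key-steps"],
            },
        },
    ],
}


def to_script_tag(data: dict) -> str:
    """Serialize JSON-LD into the <script> element placed in the page <head>."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so string values can never close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(to_script_tag(graph))
```

Before deploying, it is worth running the output through Google’s Rich Results Test or the Schema Markup Validator, since engines act only on the properties they support.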

The Knowledge Graph Advantage

Answer engines rely heavily on Knowledge Graphs to understand the relationships between entities. If your brand is an isolated node in the graph, it lacks authority.

To integrate your brand into the graph:

- Consistency is Key: Ensure your NAP data (Name, Address, Phone) and other corporate details are identical across every API endpoint and directory.

- Semantic Triples: Structure your content to reinforce Subject-Predicate-Object relationships (e.g., “Product X [Subject] integrates with [Predicate] Python [Object]”). This simple sentence structure is easier for knowledge extraction algorithms to parse.
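To see why the short Subject-Predicate-Object form helps, consider a deliberately naive, regex-based extractor. The predicate list and the “Product X” sentences are hypothetical, and production knowledge-extraction pipelines typically rely on dependency parsers or LLMs rather than regular expressions. The asymmetry is the same, though: the direct sentences yield clean triples, while the marketing-style sentence yields nothing.

```python
import re
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Hypothetical predicate vocabulary for this toy extractor.
PREDICATES = ["integrates with", "is built on", "supports"]
PATTERN = re.compile(
    r"^(?P<subj>[A-Z][\w .-]*?) "
    r"(?P<pred>" + "|".join(map(re.escape, PREDICATES)) + r") "
    r"(?P<obj>[\w .+-]+?)\.?$"
)


def extract_triples(text: str) -> list[Triple]:
    """Split text into sentences and keep those matching 'Subject predicate Object.'"""
    triples = []
    for sentence in re.split(r"(?<=\.)\s+", text.strip()):
        m = PATTERN.match(sentence)
        if m:
            triples.append(Triple(m["subj"], m["pred"], m["obj"]))
    return triples


text = (
    "Product X integrates with Python. "
    "Thanks to years of hard work and a passion for developers, our flagship offering, "
    "which many teams love, also plays nicely with Slack in most cases. "
    "Product X supports OAuth 2.0."
)
for triple in extract_triples(text):
    print(triple)
```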

Optimizing for Vector Search and RAG

Once your structure is sound, you must optimize the content for retrieval. In a RAG workflow, content is broken down into “chunks” and converted into vector embeddings. Poorly formatted content produces “noisy” vectors that mix unrelated topics, which lowers their similarity scores against a focused query.
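A toy illustration of how filler lowers similarity, assuming a bag-of-words vector in place of a learned embedding (real embeddings behave less mechanically, but mixing in unrelated text tends to pull a chunk’s vector away from any single topic). The query and chunk text are hypothetical. Appending off-topic text leaves the overlap with the query unchanged but enlarges the vector’s norm, so cosine similarity drops.

```python
import math
import re
from collections import Counter


def bow(text: str) -> Counter:
    """Toy term-count vector standing in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


query = "rotate api keys"
focused = "To rotate API keys, call the rotate endpoint and revoke the old keys."
# Same chunk plus marketing filler that shares no terms with the query.
padded = focused + " We are passionate about innovation, synergy, and delighting customers every single day."

print("focused:", round(cosine(bow(query), bow(focused)), 3))
print("padded: ", round(cosine(bow(query), bow(padded)), 3))
```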

High-Context Chunking

AI models prefer high information density. Fluff content dilutes the semantic meaning of a chunk. For developers, this means adopting a technical writing style that prioritizes precision.

- The Inverted Pyramid: Place the direct answer, the code snippet, or the definition at the very top of the block.

- Q&A Formatting: Structure headers as natural language questions. This aligns your content vectors closer to the user’s query vectors.

- Clean Code Blocks: When sharing documentation, ensure code blocks are well-commented. Comments act as natural language anchors that help the AI understand the function of the code, making it more retrievable.
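The inverted-pyramid and Q&A advice pays off at chunking time if the splitter keeps each question heading attached to its answer. A minimal sketch, assuming Markdown source with “## ” headings and a hypothetical character budget standing in for a token budget: every chunk begins with its section heading, so even the second chunk of a long section still carries the question it answers.

```python
import re


def chunk_by_heading(markdown: str, max_chars: int = 800) -> list[str]:
    """Split Markdown on '## ' headings; prefix every chunk with its heading."""
    chunks: list[str] = []
    # Zero-width split before each level-2 heading keeps the heading with its section.
    for section in re.split(r"(?m)^(?=## )", markdown):
        lines = section.strip().split("\n", 1)
        if not lines[0]:
            continue
        if lines[0].startswith("## "):
            heading, body = lines[0], (lines[1] if len(lines) > 1 else "")
        else:  # preamble before the first heading
            heading, body = "", section.strip()
        paragraphs = [p.strip() for p in body.split("\n\n") if p.strip()]
        current: list[str] = []
        for para in paragraphs:
            # Flush when adding this paragraph would exceed the budget.
            # A single oversized paragraph is kept whole rather than cut mid-sentence.
            if current and len("\n\n".join(filter(None, [heading, *current, para]))) > max_chars:
                chunks.append("\n\n".join(filter(None, [heading, *current])))
                current = []
            current.append(para)
        if current:
            chunks.append("\n\n".join(filter(None, [heading, *current])))
    return chunks


doc = (
    "## How do I rotate API keys?\n\n"
    "Call the rotate endpoint, then revoke the old key.\n\n"
    "Rotation takes effect immediately.\n\n"
    "## Which Python versions are supported?\n\n"
    "Python 3.10 and later."
)
for chunk in chunk_by_heading(doc, max_chars=60):
    print(chunk, end="\n---\n")
```

A production splitter would usually count tokens with the embedding model’s own tokenizer rather than characters.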

Format Stability

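Answer engines struggle with content that requires heavy client-side rendering or that shifts layout after load (measured as Cumulative Layout Shift, or CLS). Many AI crawlers fetch the raw HTML without executing JavaScript, so text injected later may never be seen. For optimal AEO: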

- Prioritize Server-Side Rendering (SSR) or Static Site Generation (SSG).

- Ensure that core textual content is available in the raw HTML response.

- Use semantic HTML5 tags (<article>, <section>, <aside>) to help the parser distinguish between primary content and navigational noise.
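One way to check the raw-HTML rule is to fetch a page without executing JavaScript (for example with curl) and see what text survives. This sketch uses Python’s standard html.parser and assumes your primary content sits inside <article>; it ignores text in <nav>, <aside>, <script>, and <style>. An empty result for a page that looks fine in a browser suggests the content depends on client-side rendering. The sample pages are hypothetical.

```python
from html.parser import HTMLParser


class MainTextExtractor(HTMLParser):
    """Collect text inside <article>, skipping navigation, asides, and scripts."""

    SKIP = {"nav", "aside", "script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.article_depth = 0  # >0 while inside an <article>
        self.skip_depth = 0     # >0 while inside a skipped element
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "article":
            self.article_depth += 1
        elif tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag == "article" and self.article_depth:
            self.article_depth -= 1
        elif tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.article_depth and not self.skip_depth and data.strip():
            self.parts.append(data.strip())


def main_text(raw_html: str) -> str:
    """Return the primary textual content a non-rendering parser would see."""
    parser = MainTextExtractor()
    parser.feed(raw_html)
    parser.close()
    return " ".join(parser.parts)


ssr_page = (
    "<html><body><nav>Home | Pricing</nav><article><h1>Rotate API keys</h1>"
    "<p>Call the rotate endpoint.</p><aside>Related posts</aside></article></body></html>"
)
csr_page = '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>'
print(repr(main_text(ssr_page)))
print(repr(main_text(csr_page)))
```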

Building Brand Authority in the AI Era

In the AEO landscape, authority isn’t just about backlink volume; it’s about corroboration. An AI is more likely to cite your brand if it sees your information corroborated across multiple high-authority sources in its training data or retrieval index.

The “Cite-ability” Factor

To increase your chances of being the primary citation:

- Publish Original Data: AI models are hungry for unique statistics and benchmarks. If you release a “2026 State of DevOps” report with unique data points, you become the primary source node that other answers are likely to reference.

- Quotable Expert Opinions: Include clear, concise quotes from your internal subject matter experts.

- Digital Footprint Management: Ensure your documentation and API references are hosted on high-uptime, fast-loading infrastructure. If an answer engine times out trying to retrieve your context, it will move to a competitor.
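Answer engines do not publish their fetch timeouts, so the 2-second budget below is an arbitrary assumption. The sketch uses only the standard library to check that a documentation page returns HTTP 200 and finishes downloading within that budget. It measures elapsed time explicitly because the timeout passed to urlopen applies to individual socket operations, not to the whole request.

```python
import http.client
import time
import urllib.request


def fetch_seconds(url: str, timeout: float) -> tuple[int, float]:
    """Fetch url and return (HTTP status, seconds taken to read the full body)."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        resp.read()
        status = resp.status
    return status, time.perf_counter() - start


def within_budget(url: str, budget_s: float = 2.0) -> bool:
    """True if the page returns 200 and loads completely within budget_s.

    budget_s is a hypothetical threshold, not a documented crawler limit.
    """
    try:
        status, elapsed = fetch_seconds(url, timeout=budget_s)
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError (non-2xx), and socket timeouts all subclass OSError.
        return False
    return status == 200 and elapsed <= budget_s
```

Run it against your documentation and API reference URLs, for example `within_budget("https://example.com/docs/")` (a hypothetical URL), ideally from more than one region.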

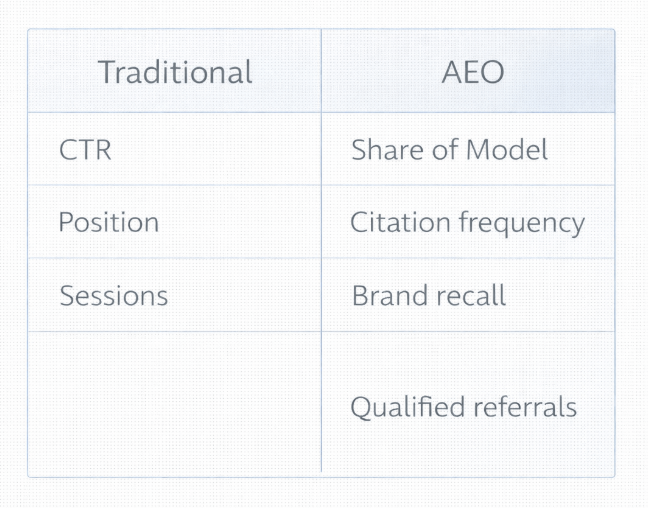

Measuring Success in AEO

Traditional metrics like Click-Through Rate (CTR) are less relevant when the user consumes the answer directly on the search interface. Instead, shift your analytics focus to:

- Share of Model (SoM): Tracking how often your brand appears in AI-generated responses to your target queries.

- Brand Search Volume: As users learn about your solution through AI answers, they should eventually search for your brand directly.

- Referral Traffic Quality: While volume may drop, the intent of users clicking through AI citations is often higher, as they have already been “qualified” by the answer engine.
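There is no single agreed formula for Share of Model. One simple version, sketched below, is the fraction of collected AI responses to your target prompts that mention your brand. It assumes you have already gathered the response texts (for example by running the same prompt set against each answer engine on a schedule); the brand names and responses are hypothetical, and plain string matching will miss paraphrases.

```python
import re


def share_of_model(responses: list[str], brand: str) -> float:
    """Fraction of responses mentioning `brand` as a whole word, case-insensitive."""
    if not responses:
        return 0.0
    # Lookarounds instead of \b so brands ending in symbols (e.g. "C++") still match.
    pattern = re.compile(rf"(?<!\w){re.escape(brand)}(?!\w)", re.IGNORECASE)
    hits = sum(1 for text in responses if pattern.search(text))
    return hits / len(responses)


def share_by_brand(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Share of Model for your brand and competitors over the same response set."""
    return {b: share_of_model(responses, b) for b in brands}


# Hypothetical responses collected for one target prompt across engines and runs.
responses = [
    "For zero-downtime deploys, Acme and Foo both work well.",
    "Foo is the most popular option.",
    "acme's docs cover this in detail.",
    "Use a managed platform.",
]
print(share_by_brand(responses, ["Acme", "Foo"]))
```

Tracking the same prompt set over time matters more than any single snapshot, since answer engine outputs vary between runs.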

Future-proofing Your Strategy

The transition to Answer Engine Optimization requires a shift in mindset from “ranking” to “teaching.” You are essentially teaching an AI how to represent your brand and your technology.

By focusing on rigorous data structuring, optimizing for vector retrieval, and maintaining high-quality, information-dense content, you maximize the chance that when an AI is asked a question about your industry, your brand provides the answer.
