Orchestrating multi-agent workflows: A guide for AI developers

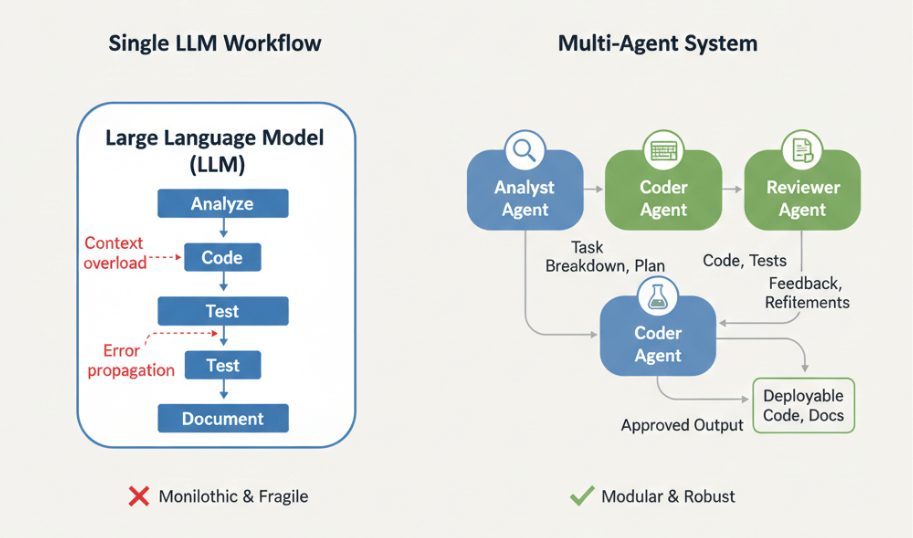

Single Large Language Model (LLM) calls are impressive, but they often hit a ceiling when faced with complex, multi-step problems. If you’ve ever tried to force one prompt to handle data extraction, code generation, and quality assurance simultaneously, you’ve likely seen the hallucinations and logic errors that follow.

The industry is shifting toward multi-agent architectures: systems where specialized agents collaborate to solve intricate tasks. Rather than a solitary genius, you build a team of experts.

In this guide, you’ll learn:

- Why multi-agent systems outperform monolithic LLM calls.

- The core patterns of agent orchestration.

- How to build a functional workflow using popular frameworks.

- Real-world strategies for handling state and errors between agents.

Why move beyond single-agent systems?

For simple queries, a single agent (like a chatbot wrapper around GPT-4) is sufficient. However, enterprise-grade development often requires distinct cognitive processes.

When you ask a single model to “write code, test it, and document it,” context windows get crowded. The model might prioritize the code at the expense of the documentation or forget the specific testing framework you mentioned three paragraphs ago.

Multi-agent workflows solve this by decoupling responsibilities. You assign one agent to be the “Coder,” another to be the “Reviewer,” and a third to be the “Tech Writer.” This specialization leads to:

- Improved Accuracy: Each agent focuses on a narrower context, reducing hallucinations.

- Modularity: You can swap out the “Coder” model (e.g., to a model fine-tuned on Python) without affecting the “Writer” agent.

- Scalability: Complex tasks are broken down into parallel or sequential subtasks.

Core orchestration patterns

Before writing code, you need to choose an architecture. Orchestration is the logic that governs how your agents talk to each other. Here are the three most common patterns used in production today.

1. Sequential Handoffs (The Chain)

This is the simplest workflow. The output of Agent A becomes the input for Agent B.

- Use case: A content pipeline where Agent A outlines a blog post, Agent B writes the draft, and Agent C edits it.

- Pros: Easy to debug and implement.

- Cons: Brittle; if Agent A fails, the whole chain breaks.
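
A sequential handoff can be sketched in a few lines of plain Python. This is a minimal illustration, not a framework API: the `outliner`, `writer`, and `editor` functions are hypothetical stubs standing in for LLM calls, and `run_chain` is an assumed helper name.

```python
from typing import Callable, List

# Each "agent" is a plain function from text to text. A real agent would call
# an LLM here; these stubs only wrap the text so the handoff order is visible.
Agent = Callable[[str], str]


def outliner(topic: str) -> str:
    return f"outline({topic})"


def writer(outline: str) -> str:
    return f"draft({outline})"


def editor(draft: str) -> str:
    return f"edited({draft})"


def run_chain(agents: List[Agent], initial_input: str) -> str:
    """Feed each agent's output to the next agent in the list."""
    result = initial_input
    for agent in agents:
        result = agent(result)
    return result


final_text = run_chain([outliner, writer, editor], "multi-agent workflows")
print(final_text)
```

Because `run_chain` has no error handling, an exception raised by any agent stops the whole run, which is the brittleness noted above.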

2. The Central Orchestrator (The Hub and Spoke)

A central “Manager” LLM analyzes the user request and delegates tasks to specific worker agents. The workers report back to the manager, who aggregates the results.

- Use case: A customer support bot. The Manager decides if the query is a “Refund request” (sends to Billing Agent) or a “Bug report” (sends to Technical Agent).

- Pros: Highly flexible and handles dynamic workflows well.

- Cons: The Manager can become a bottleneck and consume significant token resources.
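
The hub-and-spoke idea might look roughly like the sketch below. The keyword-based `classify` function is only a stand-in for the Manager LLM's routing decision, and the worker functions and labels are hypothetical names chosen to mirror the support-bot use case.

```python
from typing import Callable, Dict


def billing_agent(query: str) -> str:
    return f"[billing] handling: {query}"


def technical_agent(query: str) -> str:
    return f"[technical] handling: {query}"


# The manager's "spokes": one worker per request category.
WORKERS: Dict[str, Callable[[str], str]] = {
    "refund_request": billing_agent,
    "bug_report": technical_agent,
}


def classify(query: str) -> str:
    """Stand-in for the Manager LLM: a keyword match instead of a model call."""
    return "refund_request" if "refund" in query.lower() else "bug_report"


def manager(query: str) -> str:
    """Route the query to one worker and return that worker's result."""
    label = classify(query)
    return WORKERS[label](query)


print(manager("I want a refund for my last order"))
print(manager("The app crashes on login"))
```

Every request passes through `manager`, which is why the Manager's latency and token use set a floor for the whole system.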

3. State Flow / Graph Logic

Agents act as nodes in a graph. Transitions between agents are determined by conditional logic (if X happens, go to Agent B; if Y happens, go to Agent C).

- Use case: Code generation with a feedback loop. The “Coder” writes a script; the “Tester” runs it. If it fails, the flow loops back to “Coder” with error logs. If it passes, it moves to “Deploy.”

- Pros: Robust, and mimics human problem-solving loops.

- Cons: More complex to design and visualize.

Building a multi-agent workflow: A practical example

Let’s walk through designing a graph-based workflow for a common developer task: Automated Code Review and Refactoring.

We will conceptualize this using a framework-agnostic approach, compatible with tools like LangGraph or AutoGen.

Step 1: Define the agents

First, we identify the specialized roles needed.

- Agent 1 (The Analyst): Reads the codebase and identifies performance bottlenecks or security risks.

- Agent 2 (The Refactorer): Takes the specific issues found by the Analyst and rewrites the code block.

- Agent 3 (The Validator): Reviews the new code to ensure it compiles and adheres to style guides.
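
One lightweight way to record these roles is a small data class per agent. The `AgentSpec` type and the wording of each instruction string are assumptions for illustration; in practice the instructions would likely become each agent's system prompt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    name: str
    instructions: str  # assumed to be used as the agent's system prompt


ANALYST = AgentSpec(
    name="Analyst",
    instructions="Read the code and list performance bottlenecks and security risks.",
)
REFACTORER = AgentSpec(
    name="Refactorer",
    instructions="Rewrite the code block to fix the issues the Analyst listed.",
)
VALIDATOR = AgentSpec(
    name="Validator",
    instructions="Check that the new code compiles and follows the style guide.",
)

# Look agents up by name when wiring the workflow together.
AGENTS = {spec.name: spec for spec in (ANALYST, REFACTORER, VALIDATOR)}
print(list(AGENTS))
```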

Step 2: Define the shared state

Multi-agent systems need a “memory” that persists across the workflow. This shared state usually looks like a JSON object passed between agents.

{
  "original_code": "def process_data(x): …",
  "identified_issues": [],
  "refactored_code": null,
  "validation_status": "pending",
  "iteration_count": 0
}
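
In Python, the same shape could be declared as a `TypedDict` so that editors and type checkers can catch misspelled keys. The `ReviewState` and `new_state` names are hypothetical; the field names come from the JSON object above.

```python
import json
from typing import List, Optional, TypedDict


class ReviewState(TypedDict):
    original_code: str
    identified_issues: List[str]
    refactored_code: Optional[str]
    validation_status: str
    iteration_count: int


def new_state(original_code: str) -> ReviewState:
    """Build the initial state handed to the first agent."""
    return ReviewState(
        original_code=original_code,
        identified_issues=[],
        refactored_code=None,
        validation_status="pending",
        iteration_count=0,
    )


state = new_state("def process_data(x): ...")
# A TypedDict is a plain dict at runtime, so it serializes directly to JSON.
print(json.dumps(state, indent=2))
```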

Step 3: Orchestrate the logic

Here is where we script the interaction. We want a loop that ensures quality before finalizing.

- Entry Point: The system receives original_code.

- Analyst Node: The Analyst populates identified_issues.

- Condition: If no issues are found, jump to End.

- Refactorer Node: The Refactorer reads identified_issues and generates refactored_code.

- Validator Node: The Validator checks refactored_code.

- Condition: If validation_status is “Approved”, output the code.

- Condition: If validation_status is “Rejected”, increment iteration_count and loop back to the Refactorer with the Validator’s feedback.
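
The steps above can be sketched in plain Python as follows. The three agent functions are hypothetical stubs (a real version would call a model), the `feedback` key is an assumed addition to the shared state, and `MAX_ITERATIONS` is an assumed cap so the loop always terminates.

```python
from typing import Any, Dict

State = Dict[str, Any]
MAX_ITERATIONS = 3  # assumed limit on Refactorer/Validator round trips


def analyst(state: State) -> State:
    # Stub: flags one well-known pattern instead of calling an LLM.
    issues = []
    if "range(len(" in state["original_code"]:
        issues.append("iterate directly instead of indexing with range(len(...))")
    return {**state, "identified_issues": issues}


def refactorer(state: State) -> State:
    # Stub: the first draft uses a tab; once feedback exists, it uses spaces.
    code = "for item in items:\n    total += item"
    if not state.get("feedback"):
        code = code.replace("    ", "\t")
    return {**state, "refactored_code": code}


def validator(state: State) -> State:
    # Stub style check: reject tabs, approve everything else.
    if "\t" in state["refactored_code"]:
        return {**state, "validation_status": "Rejected",
                "feedback": "use spaces, not tabs"}
    return {**state, "validation_status": "Approved", "feedback": ""}


def run_review(original_code: str) -> State:
    state: State = {
        "original_code": original_code,
        "identified_issues": [],
        "refactored_code": None,
        "validation_status": "pending",
        "iteration_count": 0,
    }
    state = analyst(state)
    if not state["identified_issues"]:
        return state  # no issues found: jump straight to End
    while state["iteration_count"] < MAX_ITERATIONS:
        state = validator(refactorer(state))
        if state["validation_status"] == "Approved":
            break
        # Rejected: count the attempt and loop back with the feedback.
        state = {**state, "iteration_count": state["iteration_count"] + 1}
    return state


result = run_review("for i in range(len(items)):\n    total += items[i]")
print(result["validation_status"], result["iteration_count"])
```

In this stub run the Validator rejects the first draft and approves the second, so the loop exits after one retry.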

Step 4: Implementation considerations

When implementing this using a tool like LangChain or Semantic Kernel, keep these technical tips in mind:

- Temperature Control: Keep the “Validator” agent at a low temperature (near 0) for consistent, rigorous checking. The “Refactorer” might benefit from a slightly higher temperature (0.2 to 0.4) for creative problem-solving.

- Circuit Breakers: In a loop architecture (like the one in Step 3), always include a maximum iteration count (e.g., max_iterations=3). Otherwise, two agents might get stuck in an infinite loop of correcting each other, burning through your API budget.
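
Both tips can be captured in a small amount of configuration and control code. This is a sketch under stated assumptions: `AgentSettings`, `MaxIterationsExceeded`, and `run_with_circuit_breaker` are hypothetical names, and the temperature values are simply picked from the ranges mentioned above.

```python
from dataclasses import dataclass
from typing import Callable, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class AgentSettings:
    temperature: float


# Assumed values: near 0 for the Validator, 0.2 to 0.4 for the Refactorer.
SETTINGS = {
    "validator": AgentSettings(temperature=0.0),
    "refactorer": AgentSettings(temperature=0.3),
}


class MaxIterationsExceeded(RuntimeError):
    """Raised when the loop hits its cap without reaching a finished state."""


def run_with_circuit_breaker(
    step: Callable[[T], T],
    is_done: Callable[[T], bool],
    state: T,
    max_iterations: int = 3,
) -> T:
    """Apply `step` until `is_done` is true, failing loudly at the cap."""
    for _ in range(max_iterations):
        state = step(state)
        if is_done(state):
            return state
    raise MaxIterationsExceeded(f"gave up after {max_iterations} iterations")


# Toy usage: the "state" is a counter that is done once it reaches 2.
print(run_with_circuit_breaker(lambda n: n + 1, lambda n: n >= 2, 0))
```

Raising an exception at the cap, rather than returning silently, makes a stuck loop visible in logs and lets the caller decide whether to escalate to a human.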

Managing complexity: Frameworks vs. Custom Code

Should you use an existing framework or write your orchestration logic in raw Python?

Use a Framework (LangGraph, AutoGen, CrewAI) if:

- You need standardized tracing and debugging tools.

- You want built-in support for memory persistence and state management.

- You plan to visualize complex graphs.

Use Custom Python logic if:

- Your workflow is deterministic (purely sequential).

- You need extreme latency optimization (frameworks add overhead).

- You are integrating with legacy non-AI systems that don’t fit framework abstractions.

Key takeaways for developers

Orchestrating multi-agent workflows is the next step in AI maturity. By moving away from “God mode” prompts and toward specialized, collaborative systems, you can build applications that are more reliable and capable of handling ambiguity.

To summarize:

- Decompose tasks: Break complex requests into single-responsibility units.

- Choose the right pattern: Use sequential chains for simple pipelines and graph logic for iterative problem-solving.

- Manage state: Ensure your agents share a structured context object.

- Guardrails are mandatory: Implement iteration limits and validation checks to prevent runaway loops.

Ready to start building? We recommend exploring tools like n8n for visual orchestration or LangGraph for code-first graph definitions to get your first multi-agent system running this week.
