From Chatbots to Action-Oriented AI: The Shift to Executing Systems

For the last few years, the AI narrative has been dominated by conversational capabilities. Large Language Models (LLMs) like GPT-4 and Claude 3 dazzled the industry with their ability to parse natural language, generate code snippets, and hold coherent conversations. But for developers and enterprises building robust applications, the novelty of a “chatty” bot is wearing off.

The industry is pivoting toward a more pragmatic and powerful paradigm: Action-Oriented AI.

This transition marks a fundamental shift from systems that merely retrieve information or simulate conversation to systems that autonomously execute complex workflows. In this article, we’ll explore the technical evolution from static chatbots to dynamic agents, how this impacts your development stack, and what it means for the future of automated software engineering.

The Limitations of “Talking” Systems

To understand why the shift to action-oriented AI is necessary, we first need to look at the bottlenecks inherent in standard conversational interfaces.

Traditional LLM implementations operate primarily as information retrieval and synthesis engines. When a user prompts a model, the system:

- Ingests the tokenized input.

- References its pre-trained weights (and potentially a RAG vector database).

- Predicts the next probable token to generate a response.

While this is excellent for drafting emails or explaining code concepts, it lacks agency. A standard chatbot is passive. It cannot modify a database, deploy a commit to production, or navigate a CRM to update a lead status without extensive, hard-coded scaffolding.

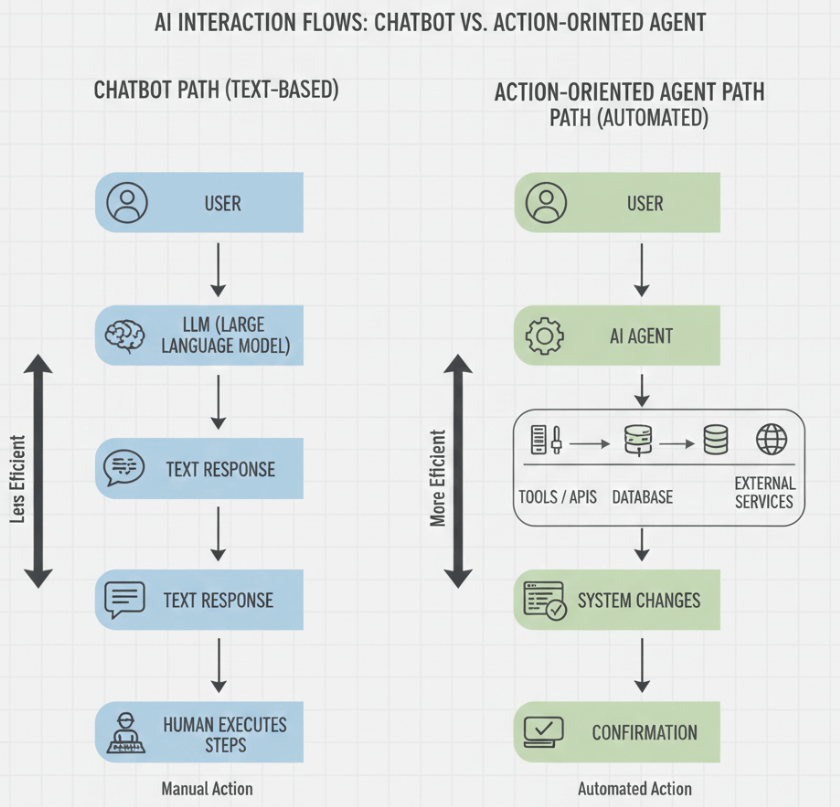

For developers, this limitation creates a “human-in-the-loop” dependency. The AI suggests a solution, but the human must copy and paste the code, run the terminal command, or click the button. Action-oriented AI removes this friction by granting the model the tools and permissions to execute these steps itself.

Defining Action-Oriented AI (Agentic AI)

Action-oriented AI, often referred to as Agentic AI, involves wrapping LLMs in a control loop that allows them to reason, plan, and act. Instead of just generating text, these systems generate function calls or API requests.

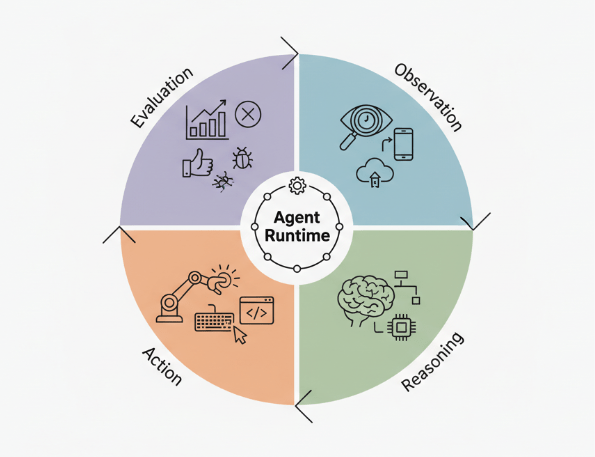

These systems operate on a loop often described as ReAct (Reasoning + Acting):

- Observation: The agent perceives the state of the environment (e.g., reading a GitHub issue).

- Reasoning: The model plans the necessary steps to resolve the request.

- Action: The agent executes a tool (e.g., calling a Python script or an external API).

- Evaluation: The agent analyzes the output of that action and decides if the task is complete or if further actions are required.

This allows developers to move from building “knowledge engines” to building “task engines.”
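The loop above can be sketched in a few lines of Python. This is a minimal illustration, not a production agent: the tools (`read_issue`, `run_tests`) are hypothetical stubs, and `scripted_policy` is a hard-coded stand-in for the LLM call that would normally choose the next step.

```python
from typing import Callable

# Hypothetical tools; a real agent would call real APIs here.
def read_issue(issue_id: str) -> str:
    return f"Issue {issue_id}: login page returns 404"

def run_tests(target: str) -> str:
    return f"tests for {target}: passed"

TOOLS: dict[str, Callable[[str], str]] = {
    "read_issue": read_issue,
    "run_tests": run_tests,
}

def scripted_policy(history: list[str]) -> dict:
    """Stand-in for the LLM: chooses the next step from the observations so far."""
    if len(history) == 0:
        return {"action": "read_issue", "input": "42"}
    if len(history) == 1:
        return {"action": "run_tests", "input": "login"}
    return {"action": "finish", "input": "Issue 42 triaged; login tests pass."}

def run_agent(policy, max_steps: int = 5) -> tuple[str, list[str]]:
    history: list[str] = []
    for _ in range(max_steps):
        decision = policy(history)            # Reasoning: decide the next step
        if decision["action"] == "finish":    # Evaluation: the task is complete
            return decision["input"], history
        tool = TOOLS[decision["action"]]      # Action: execute the chosen tool
        observation = tool(decision["input"])
        history.append(observation)           # Observation: feed the result back
    return "stopped: step budget exhausted", history

answer, trace = run_agent(scripted_policy)
print(answer)
print(trace)
```

The `max_steps` budget is a design choice worth keeping even in a sketch: without it, a model that never decides it is finished would loop indefinitely.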

Key Components of an Executing System

Transitioning from a chatbot to an executing agent requires a different architectural approach. You aren’t just managing context windows; you are managing tool definitions and permissions.

1. Tool Use and Function Calling

The core enabler of action-oriented AI is the ability of models to output structured data (like JSON) specifically designed to match function signatures.

Modern frameworks such as OpenAI’s function calling API or LangChain’s tool abstractions allow you to define a set of capabilities the AI can access. For example, you might define a tool called run_sql_query or send_slack_notification. The model doesn’t execute the code itself; it acts as the orchestrator, requesting that the application execute the specific function with the parameters it has generated.
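As a rough sketch of that division of labor, the snippet below parses a JSON function call and dispatches it to application code. The `{"name": ..., "arguments": ...}` shape is an assumption made for illustration, not the exact wire format of any particular vendor, and both tool bodies are stubs.

```python
import json

def run_sql_query(query: str) -> list:
    # Stub: a real implementation would run the query against a database.
    return [{"query": query, "rows": 0}]

def send_slack_notification(channel: str, message: str) -> str:
    # Stub: a real implementation would call a messaging API.
    return f"sent to {channel}: {message}"

# The application, not the model, owns the mapping from tool names to code.
TOOL_REGISTRY = {
    "run_sql_query": run_sql_query,
    "send_slack_notification": send_slack_notification,
}

def dispatch(model_output: str):
    """Parse the model's structured output and execute the requested tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOL_REGISTRY:
        raise ValueError(f"unknown tool: {name}")
    return TOOL_REGISTRY[name](**call["arguments"])

# Example of what a model might emit instead of free-form text.
model_output = (
    '{"name": "send_slack_notification", '
    '"arguments": {"channel": "#ops", "message": "deploy finished"}}'
)
result = dispatch(model_output)
print(result)
```

Because the registry is an explicit allowlist, the model can only request functions the application has chosen to expose.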

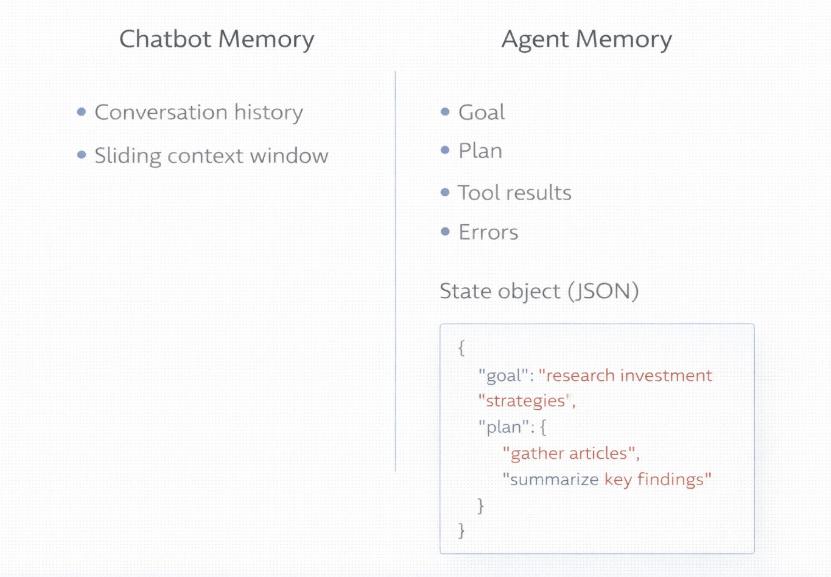

2. The Context Loop and Memory

In a simple chatbot, memory is usually a sliding window of conversation history. In an action-oriented system, memory must be more robust. The system needs to track:

- The initial goal: What was the user’s objective?

- The plan: What steps does the agent decide to take?

- Action outputs: What happened when the agent tried to execute step 1? Did it fail? If so, the memory needs to retain that error log so the agent can correct its course in step 2.
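One way to hold these three pieces together is a small state object that is re-serialized into the prompt on every turn. The class and field names below (`AgentMemory`, `StepRecord`, `to_prompt`) are hypothetical, and the CRM example values are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class StepRecord:
    action: str
    output: str
    ok: bool

@dataclass
class AgentMemory:
    goal: str                                   # the initial goal
    plan: list[str] = field(default_factory=list)        # the planned steps
    steps: list[StepRecord] = field(default_factory=list)  # action outputs

    def record(self, action: str, output: str, ok: bool) -> None:
        self.steps.append(StepRecord(action, output, ok))

    def most_recent_failure(self) -> Optional[str]:
        """Return the output of the latest failed step, if any."""
        for step in reversed(self.steps):
            if not step.ok:
                return step.output
        return None

    def to_prompt(self) -> str:
        """Serialize goal, plan, and step results for the next model call."""
        lines = [f"Goal: {self.goal}", "Plan: " + " -> ".join(self.plan)]
        for i, step in enumerate(self.steps, 1):
            status = "ok" if step.ok else "FAILED"
            lines.append(f"Step {i} [{status}] {step.action}: {step.output}")
        return "\n".join(lines)

memory = AgentMemory(
    goal="Update lead status in CRM",
    plan=["look up lead", "update status"],
)
memory.record("look up lead", "timeout contacting CRM", ok=False)
memory.record("look up lead", "found lead 17", ok=True)
print(memory.to_prompt())
```

Keeping the failed attempt in the record, rather than overwriting it with the retry, is what lets the model see the error and adjust its next step.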

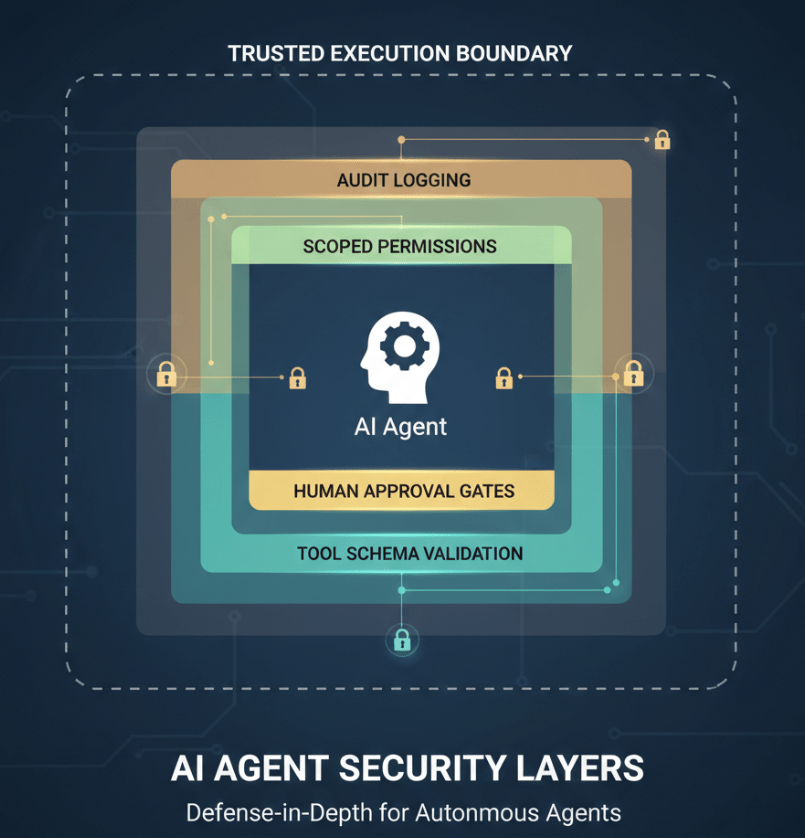

3. Permissions and Safety (The “Guardrails”)

When an AI can execute SQL queries or hit internal APIs, safety becomes critical. You cannot simply give an LLM root access to your infrastructure.

Developers building executing systems must implement strict guardrails. This often involves:

- Human-in-the-loop approval: Requiring user confirmation before high-stakes actions (like deleting a database table).

- Scoped API keys: Ensuring the agent only has access to the specific endpoints required for the task.

- Validated outputs: Using tools that enforce strict schema validation so that invalid or “hallucinated” parameters are rejected before anything executes.
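Two of these guardrails, approval gates and schema validation, can be sketched with the standard library alone. The tool names (`drop_table`, `count_rows`), the schema format, and the `approve` callback are all assumptions for illustration; in a real system the callback would prompt a human, and validation would more likely come from a dedicated library.

```python
# Hypothetical tools and their expected parameter types.
SCHEMAS = {
    "drop_table": {"table": str},
    "count_rows": {"table": str},
}

# Tools that must never run without explicit confirmation.
HIGH_STAKES = {"drop_table"}

def validate(name: str, args: dict) -> None:
    """Reject unknown tools, unexpected parameters, and wrong types."""
    schema = SCHEMAS.get(name)
    if schema is None:
        raise ValueError(f"unknown tool: {name}")
    if set(args) != set(schema):
        raise ValueError(f"expected parameters {sorted(schema)}, got {sorted(args)}")
    for key, expected in schema.items():
        if not isinstance(args[key], expected):
            raise ValueError(f"{key} must be {expected.__name__}")

def guarded_call(name: str, args: dict, approve) -> str:
    """Validate first, then require approval for high-stakes tools."""
    validate(name, args)
    if name in HIGH_STAKES and not approve(name, args):
        return "blocked: approval denied"
    return f"executed {name}"  # a real system would invoke the tool here

def deny_all(name: str, args: dict) -> bool:
    return False

print(guarded_call("count_rows", {"table": "leads"}, deny_all))
print(guarded_call("drop_table", {"table": "leads"}, deny_all))
```

Validation runs before the approval prompt so that a human is never asked to confirm a call that would be rejected anyway.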

Real-World Application Scenarios

How does this look in practice? Let’s contrast a chatbot workflow with an action-oriented workflow in a DevOps scenario.

The Chatbot Scenario:

- User: “Why is the production server throwing 500 errors?”

- Chatbot: “500 errors usually indicate a server-side issue. You should check your application logs, verify your database connection, and ensure your recent deployment didn’t introduce a bug.”

- Result: Helpful advice, but the developer still has to go do the work.

The Action-Oriented Scenario:

- User: “Why is the production server throwing 500 errors?”

- Agent: Connects to the Datadog API. Retrieves recent error logs. Identifies a timeout error in the payment gateway service. Checks the latest Git commit history.

- Agent Response: “I detected a spike in timeout errors on the payment gateway starting at 14:00 UTC, which correlates with commit 8f3a2b. I can roll back this commit or create a Jira ticket for the backend team. How would you like to proceed?”

- Result: The diagnosis is done. The solution is ready to be executed.

Architecting for Scalability and Performance

Building these systems introduces new challenges regarding latency and cost.

Optimizing Latency

Action loops can be slow. If an agent needs to perform three distinct reasoning steps and two API calls to answer a question, the user might be waiting 30+ seconds. To mitigate this:

- Parallel execution: Where possible, design agents that can execute independent tools simultaneously rather than sequentially.

- Smaller, specialized models: You don’t always need GPT-4 for every step. Routing simple tasks to smaller, faster models (like Llama 3 8B or Claude 3 Haiku) can significantly reduce latency without sacrificing accuracy for rote tasks.
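To illustrate the parallel-execution point, here is a small sketch using `asyncio.gather` from the Python standard library. The two tool functions are hypothetical, their return strings are placeholder values, and `asyncio.sleep` stands in for network latency.

```python
import asyncio
import time

async def fetch_error_logs() -> str:
    await asyncio.sleep(0.2)  # simulated latency of a monitoring API call
    return "logs: placeholder"

async def fetch_recent_commits() -> str:
    await asyncio.sleep(0.2)  # simulated latency of a Git hosting API call
    return "commits: placeholder"

async def gather_context() -> list[str]:
    # The two calls do not depend on each other, so they run concurrently.
    results = await asyncio.gather(fetch_error_logs(), fetch_recent_commits())
    return list(results)

start = time.perf_counter()
context = asyncio.run(gather_context())
elapsed = time.perf_counter() - start

print(context)
print(f"elapsed: {elapsed:.2f}s")
```

Run sequentially, the two simulated calls would take at least 0.4 seconds; run concurrently, the total is close to the slower of the two. `asyncio.gather` returns results in the order the awaitables were passed, so downstream code can rely on positions.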

Integration with Existing Stacks

You don’t need to rebuild your infrastructure to accommodate action-oriented AI. The goal is seamless integration.

- Python Ecosystem: Leveraging libraries like Pydantic for data validation allows you to easily map your existing Python functions to AI tools.

- API Gateways: Treat your AI agent as just another client consuming your internal APIs. This ensures that your existing authentication and logging standards apply to the AI just as they do to human users.
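To make the function-to-tool mapping concrete, the sketch below derives a JSON-Schema-style tool definition from an existing function's type hints. It deliberately uses only the standard library (`inspect` and `typing`) as a simplified stand-in for what a validation library such as Pydantic would provide; `tool_definition` and `update_lead_status` are hypothetical names, and only four primitive types are handled.

```python
import inspect
from typing import get_type_hints

# Minimal mapping from Python types to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def tool_definition(func) -> dict:
    """Build a tool description from a function's signature and docstring."""
    hints = get_type_hints(func)
    hints.pop("return", None)  # the return type is not a parameter
    signature = inspect.signature(func)
    properties = {name: {"type": JSON_TYPES[tp]} for name, tp in hints.items()}
    required = [
        name
        for name, param in signature.parameters.items()
        if param.default is inspect.Parameter.empty
    ]
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }

# An existing application function, unchanged.
def update_lead_status(lead_id: int, status: str, notify: bool = False) -> str:
    """Update a CRM lead's status."""
    return f"lead {lead_id} -> {status}"

definition = tool_definition(update_lead_status)
print(definition)
```

The point of the design is that the function itself stays untouched: the tool description is generated from metadata the code already carries.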

The Future of AI is Agentic

The transition from chatbots to action-oriented AI represents the maturity of the generative AI landscape. We are moving past the “wow” factor of text generation and into the “ROI” phase of task automation.

For developers, this is an opportunity to build tools that don’t just help users work but do the work. By focusing on tool definitions, robust reasoning loops, and safety guardrails, you can build systems that deliver genuine operational efficiency and high-performance results.

To start building reliable AI-powered automation, audit your current workflows to identify where “advice” can be replaced with “execution.”
